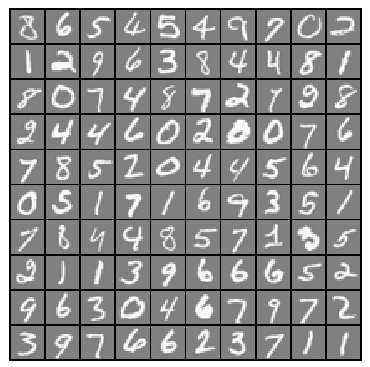

> Information digest, 2021-05-10

### Machine Learning: Programming Exercise 3

##### Visualizing the data

```matlab
% Load saved matrices from file
load('ex3data1.mat');
% The matrices X and y will now be in your MATLAB environment
m = size(X, 1);

% Randomly select 100 data points to display
rand_indices = randperm(m);
sel = X(rand_indices(1:100), :);
displayData(sel);
```

`randperm(N)` returns a vector containing a random permutation of the integers 1 through N.

#### Vectorizing regularized logistic regression

> Now modify your code in lrCostFunction to account for regularization. Once again, you should not put any loops into your code. When you are finished, run the code below to test your vectorized implementation and compare to expected outputs:

```matlab
theta_t = [-2; -1; 1; 2];
X_t = [ones(5,1) reshape(1:15,5,3)/10];
y_t = ([1;0;1;0;1] >= 0.5);
lambda_t = 3;
[J, grad] = lrCostFunction(theta_t, X_t, y_t, lambda_t);
fprintf('Cost: %f | Expected cost: 2.534819\n',J);
```

- lrCostFunction.m

The requirements looked very familiar (logistic regression, regularization), so I copied the cost function code straight from ex2 and the submission passed. I did not check it for loops.

Loop-free reference implementation: [coursera-machine-learning/machine-learning-ex3/ex3/lrCostFunction.m](https://github.com/benoitvallon/coursera-machine-learning/blob/master/machine-learning-ex3/ex3/lrCostFunction.m)

```matlab
function [J, grad] = lrCostFunction(theta, X, y, lambda)
%LRCOSTFUNCTION Compute cost and gradient for logistic regression with
%regularization
%   J = LRCOSTFUNCTION(theta, X, y, lambda) computes the cost of using
%   theta as the parameter for regularized logistic regression and the
%   gradient of the cost w.r.t. the parameters.

% Initialize some useful values
m = length(y); % number of training examples

% You need to return the following variables correctly
J = 0;
grad = zeros(size(theta));

% ====================== YOUR CODE HERE ======================
% Instructions: Compute the cost of a particular choice of theta.
%               You should set J to the cost.
%               Compute the partial derivatives and set grad to the partial
%               derivatives of the cost w.r.t. each parameter in theta
%
% Hint: The computation of the cost function and gradients can be
%       efficiently vectorized. For example, consider the computation
%
%           sigmoid(X * theta)
%
%       Each row of the resulting matrix will contain the value of the
%       prediction for that example. You can make use of this to vectorize
%       the cost function and gradient computations.
%
% Hint: When computing the gradient of the regularized cost function,
%       there're many possible vectorized solutions, but one solution
%       looks like:
%           grad = (unregularized gradient for logistic regression)
%           temp = theta;
%           temp(1) = 0;   % because we don't add anything for j = 0
%           grad = grad + YOUR_CODE_HERE (using the temp variable)
%

% Predictions for every example at once (m x 1)
sigmo = sigmoid(X * theta);

% Regularization term; the bias parameter theta(1) is excluded
Jreg = lambda / (2 * m) * (theta' * theta - theta(1) .^2);
J = 1 / m * (-y' * log(sigmo) - (1 - y)' * log(1 - sigmo)) + Jreg;

% Zero out the bias entry so it is not regularized in the gradient
temp = theta;
temp(1) = 0;
gradreg = lambda / m * temp;
grad = 1 / m * X' * (sigmo - y) + gradreg;

% =============================================================

grad = grad(:);

end
```

#### One-vs-all classification

MATLAB Tip: Logical arrays in MATLAB are arrays which contain binary (0 or 1) elements. In MATLAB, evaluating the expression `a == b` for a vector `a` and scalar `b` will return a vector of the same size as `a` with ones at positions where the elements of `a` are equal to `b` and zeroes where they are different. To see how this works for yourself, run the following code:

```matlab
a = 1:10; % Create a and b
b = 3;
disp(a == b) % You should try different values of b here
```

Furthermore, you will be using fmincg for this exercise (instead of fminunc). fmincg works similarly to fminunc, but is more efficient for dealing with a large number of parameters.

After you have correctly completed the code for oneVsAll.m, run the code below to use your oneVsAll function to train a multi-class classifier.

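To make the `y == c` trick concrete before running the training call, here is a minimal sketch of how a multi-class label vector becomes the binary targets that each one-vs-all classifier sees. The toy labels `y_demo`, the class count `num_demo_labels`, and the matrix `Y_binary` are made up for illustration and are not part of the exercise files.

```matlab
% Hypothetical toy labels (not from ex3data1.mat): 5 examples, 3 classes
y_demo = [1; 3; 2; 3; 1];
num_demo_labels = 3;

% Column c holds the binary targets for the "class c vs. the rest" problem
Y_binary = zeros(numel(y_demo), num_demo_labels);
for c = 1:num_demo_labels
    Y_binary(:, c) = (y_demo == c);  % 1 where the label equals c, else 0
end

disp(Y_binary)
```

Column `c` of `Y_binary` corresponds to the `(y == c)` argument that the training loop hands to `lrCostFunction` for class `c`.
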
```matlab
num_labels = 10; % 10 labels, from 1 to 10
lambda = 0.1;
[all_theta] = oneVsAll(X, y, num_labels, lambda);
```

- oneVsAll.m

```matlab
function [all_theta] = oneVsAll(X, y, num_labels, lambda)
%ONEVSALL trains multiple logistic regression classifiers and returns all
%the classifiers in a matrix all_theta, where the i-th row of all_theta
%corresponds to the classifier for label i
%   [all_theta] = ONEVSALL(X, y, num_labels, lambda) trains num_labels
%   logistic regression classifiers and returns each of these classifiers
%   in a matrix all_theta, where the i-th row of all_theta corresponds
%   to the classifier for label i

% Some useful variables
m = size(X, 1);
n = size(X, 2);

% You need to return the following variables correctly
all_theta = zeros(num_labels, n + 1);

% Add ones to the X data matrix
X = [ones(m, 1) X];

% ====================== YOUR CODE HERE ======================
% Instructions: You should complete the following code to train num_labels
%               logistic regression classifiers with regularization
%               parameter lambda.
%
% Hint: theta(:) will return a column vector.
%
% Hint: You can use y == c to obtain a vector of 1's and 0's that tell you
%       whether the ground truth is true/false for this class.
%
% Note: For this assignment, we recommend using fmincg to optimize the cost
%       function. It is okay to use a for-loop (for c = 1:num_labels) to
%       loop over the different classes.
%
%       fmincg works similarly to fminunc, but is more efficient when we
%       are dealing with large number of parameters.
%
% Example Code for fmincg:
%
%     % Set Initial theta
%     initial_theta = zeros(n + 1, 1);
%
%     % Set options for fminunc
%     options = optimset('GradObj', 'on', 'MaxIter', 50);
%
%     % Run fmincg to obtain the optimal theta
%     % This function will return theta and the cost
%     [theta] = ...
%         fmincg (@(t)(lrCostFunction(t, X, (y == c), lambda)), ...
%                 initial_theta, options);
%

% Set initial theta
initial_theta = zeros(n + 1, 1);

% Set options for the optimizer (gradient supplied, 50 iterations max)
options = optimset('GradObj', 'on', 'MaxIter', 50);

% Train one binary classifier per class and store it as row c
for c = 1:num_labels
    [theta] = fmincg (@(t)(lrCostFunction(t, X, (y == c), lambda)), ...
                      initial_theta, options);
    all_theta(c, :) = theta';
end

% =========================================================================

end
```

#### One-vs-all prediction

After training your one-vs-all classifier, you can now use it to predict the digit contained in a given image. For each input, you should compute the 'probability' that it belongs to each class using the trained logistic regression classifiers. Your one-vs-all prediction function will pick the class for which the corresponding logistic regression classifier outputs the highest probability and return the class label as the prediction for the input example. You should now complete the code in predictOneVsAll.m to use the one-vs-all classifier to make predictions.

Once you are done, run the code below to call your predictOneVsAll function using the learned value of `all_theta`. You should see that the training set accuracy is about 94.9% (i.e., it classifies 94.9% of the examples in the training set correctly).

```matlab
pred = predictOneVsAll(all_theta, X);
fprintf('\nTraining Set Accuracy: %f\n', mean(double(pred == y)) * 100);
```

- predictOneVsAll.m

Reference: https://github.com/LuyaoChen/machine-learning-ex3/blob/master/ex3/predictOneVsAll.m

```matlab
function p = predictOneVsAll(all_theta, X)
%PREDICT Predict the label for a trained one-vs-all classifier. The labels
%are in the range 1..K, where K = size(all_theta, 1).
%  p = PREDICTONEVSALL(all_theta, X) will return a vector of predictions
%  for each example in the matrix X. Note that X contains the examples in
%  rows. all_theta is a matrix where the i-th row is a trained logistic
%  regression theta vector for the i-th class. You should set p to a vector
%  of values from 1..K (e.g., p = [1; 3; 1; 2] predicts classes 1, 3, 1, 2
%  for 4 examples)

m = size(X, 1);
num_labels = size(all_theta, 1);

% You need to return the following variables correctly
p = zeros(size(X, 1), 1);

% Add ones to the X data matrix
X = [ones(m, 1) X];

% ====================== YOUR CODE HERE ======================
% Instructions: Complete the following code to make predictions using
%               your learned logistic regression parameters (one-vs-all).
%               You should set p to a vector of predictions (from 1 to
%               num_labels).
%
% Hint: This code can be done all vectorized using the max function.
%       In particular, the max function can also return the index of the
%       max element, for more information see 'help max'. If your examples
%       are in rows, then, you can use max(A, [], 2) to obtain the max
%       for each row.
%

% Each row of sigmoid(X * all_theta') holds one example's score per class;
% the column index of the row maximum is the predicted label
[probability, p] = max(sigmoid(X * all_theta'), [], 2);

% =========================================================================

end
```

#### Neural Networks

In the previous part of this exercise, you implemented multi-class logistic regression to recognize handwritten digits. However, logistic regression cannot form more complex hypotheses as it is only a linear classifier. (You could add more features such as polynomial features to logistic regression, but that can be very expensive to train.) In this part of the exercise, you will implement a neural network to recognize handwritten digits using the same training set as before. The neural network will be able to represent complex models that form non-linear hypotheses. For this week, you will be using parameters from a neural network that we have already trained. Your goal is to implement the feedforward propagation algorithm to use our weights for prediction. In next week's exercise, you will write the backpropagation algorithm for learning the neural network parameters.

##### Model representation

Our neural network is shown in Figure 2.
It has 3 layers: an input layer, a hidden layer and an output layer. Recall that our inputs are pixel values of digit images. Since the images are of size 20 x 20, this gives us 400 input layer units (excluding the extra bias unit which always outputs +1). As before, the training data will be loaded into the variables X and y.

```matlab
load('ex3data1.mat');
m = size(X, 1);

% Randomly select 100 data points to display
sel = randperm(size(X, 1));
sel = sel(1:100);
displayData(X(sel, :));

% Load saved matrices from file
load('ex3weights.mat');
% Theta1 has size 25 x 401
% Theta2 has size 10 x 26
```

#### Feedforward propagation and prediction

Implementation Note: The matrix X contains the examples in rows. When you complete the code in predict.m, you will need to add the column of 1's to the matrix. The matrices Theta1 and Theta2 contain the parameters for each unit in rows. Specifically, the first row of Theta1 corresponds to the first hidden unit in the second layer. In MATLAB, when you compute the hidden-layer activations, be sure that you index (and if necessary, transpose) X correctly so that each example's activation comes out as a column vector.

```matlab
pred = predict(Theta1, Theta2, X);
fprintf('\nTraining Set Accuracy: %f\n', mean(double(pred == y)) * 100);

% Pick one random example
rp = randi(m);
% Predict
pred = predict(Theta1, Theta2, X(rp,:));
fprintf('\nNeural Network Prediction: %d (digit %d)\n', pred, mod(pred, 10));
% Display
displayData(X(rp, :));
```

#### My Favorite One Liners

Original article: [My Favorite One Liners](https://muhammadraza.me/2021/Oneliners/)

- `ps aux | convert label:@- process.png` This command converts your shell output into an image, which is much easier than taking a screenshot of your shell when you want to share the output with someone.
  *Note: the `convert` utility is part of ImageMagick; if you don't have `convert`, you can get it by installing ImageMagick.*
- `curl ipinfo.io` shows your external IP address.
- `ls -d */` lists directories only.
- `du -hs */ | sort -hr | head` shows the 10 largest directories in your current directory.

### Other Reading

#### Landing Page Checklist

https://landingpage.fyi/landing-page-checklist.html

Build your best landing page with 100+ hand-picked tools: landing page builders, growth tools, and booster resources.

##### Checklist

- [ ] **Find a Domain Name**
  - Namecheap, GoDaddy, Domain.com
- [ ] **Build a Landing Page**
  - Unicorn Platform, Webflow, [Tails](https://devdojo.com/tails/app) (Tailwind)
  - [Dorik](https://landingpage.fyi/landing-page-checklist.html), [Versoly](https://versoly.com/templates), [umso](https://app.umso.com/)
- [ ] **Find Good Hosting**
  - Digital Ocean, [Cloudflare Pages (JAM)](https://pages.cloudflare.com/), Netlify / Vercel
  - [Hostinger](https://www.hostinger.jp/), Linode
- [ ] **Design a Logo**
  - [Looka](https://looka.com/), [Smashing Logo](https://smashinglogo.com/), [My Brand New Logo](https://mybrandnewlogo.com/)
- [ ] **Discover Illustration Sets**
  - [Undraw](https://undraw.co/illustrations), [Blush Design](https://blush.design/), [Growwwkit](https://growwwkit.com/#products), [Craftwork](https://craftwork.design/?ref=96), [Pixel True](https://www.pixeltrue.com/), [Storyset](https://storyset.com/)
  - Drawkit, Vector Creator, Ui8
- [ ] **Write SEO-Friendly Marketing Copy**
  - Conversion.ai, Headlime
  - Copy.ai, Anyword, Contentbot
- [ ] **Keyword Research**
  - Semrush, Ahrefs, Search Console
  - Keyword Tool, Ubersuggest, Google Trends
- [ ] **Social Proof**
  - Testimonial.to, Yotpo, Smile.io
  - Nudgify, Shoutout, Fomo
- [ ] **Capture and Send Email**
  - Mailerlite, Klaviyo, Mailchimp, Sendinblue
  - Active Campaign, Sendfox, Popup Smart, WisePops
- [ ] **Add Chatbot to Your Site**
  - Crisp Chat, Freshchat, Intercom, Drift, Papercups, Chatra
- [ ] **Track Your Website Performance**
  - Google Analytics, Fathom Analytics, Plausible Analytics
  - Simple Analytics, Mixpanel, Baremetrics
- [ ] **Generate Legal Documents**
  - Iubenda, CookieHub, Metomic, Hello Consent, Getterms
  - Termly, Osano
- [ ] **Get Feedback & Onboarding**
  - Hotjar, Kampsite, Userguiding, Feedback Fish, Feedbear
  - Upvoty, Freddy Feedback
- [ ] **Measure Your Site's Performance**
  - PageSpeed Insights, web.dev, GTMetrix, Google Search Console
  - Screpy, Sitechecker
- [ ] **Honorable Mentions**
  - Zapier, Grammarly, Integromat, Appsumo, Secret, Drip
  - WebGazer, Getform, StoryChief

#### A Checklist For First-Time Engineering Managers

https://blog.pragmaticengineer.com/checklist-for-first-time-managers/

- **Team building and teaching.** Build trust on the team, execute on hiring (if they have headcount), and develop people on their team.
- **Deliver results.** Ensure the team has a structure to execute on, get things done, and keep a high quality bar.
- **Collaborate and connect.** Keep an open communications channel with the team, connect people and teams, and run good meetings.
- **Vision.** Ensure the team has a purpose and collect team values. Involve the team in planning for what the team will do.
- **Professional growth.** Keep growing as a person: set their own goals, work with a mentor, network with peers and give back.

#### AI Learning Roadmap

[AI-Expert-Roadmap](https://github.com/AMAI-GmbH/AI-Expert-Roadmap)

- Machine Learning
- Deep Learning